The recent explosion of the internet of things (IoT) and 5G networks has enabled the creation of new cloud-based applications across a wide range of industries. However, many of these applications, such as driverless cars or wearable medical devices, require extraordinarily fast processing, low latency, and high bandwidth to operate correctly for end users.

Edge data centers provide a way for content providers to move much of the processing to the edge of the network — or, in other words, closer to the user.

In this article, we will explore exactly what an edge data center is and some of the benefits it affords over traditional centralized data centers.

What Is an Edge Data Center?

An edge data center is a data facility that sits on the outskirts of a broader network. This topology allows the network to serve computing resources physically closer to the end user, much in the same way the nervous system delivers signals throughout the human body.

While this topology and proximity may initially seem like an implementation detail, it’s actually an essential feature of critical IT applications. And that’s because of the two most important performance characteristics for an end user in this type of network: latency and throughput.


Latency

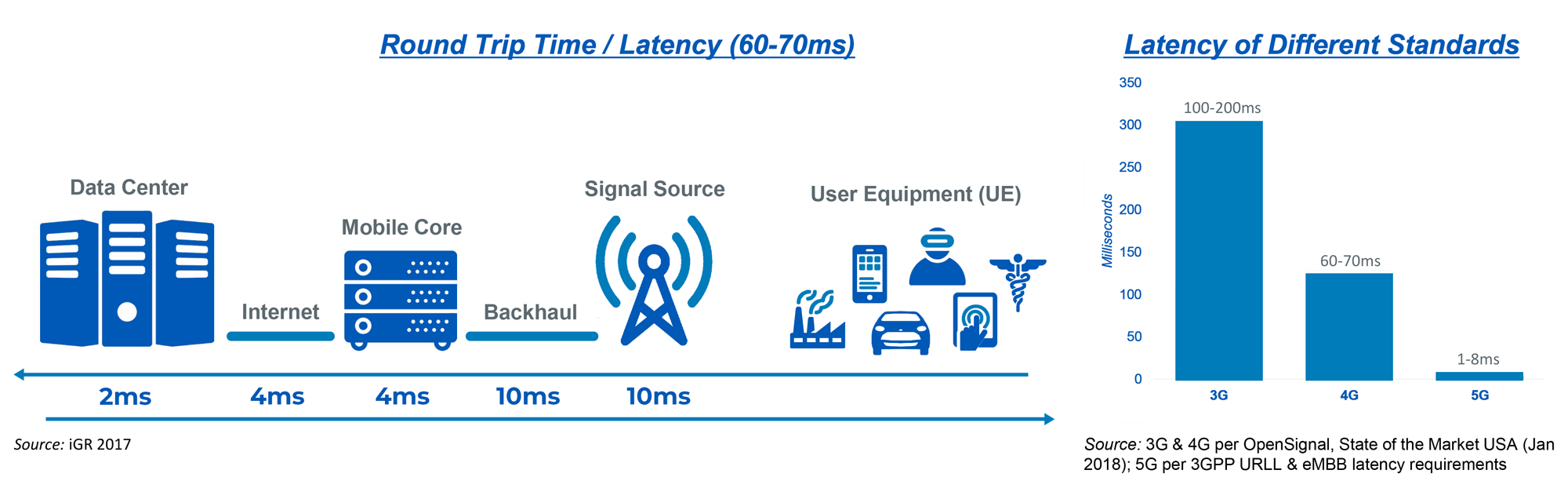

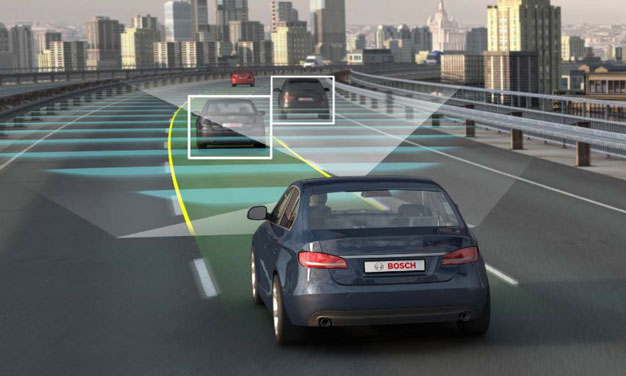

Proximity is a key factor in latency. When the data center is far from the end user, latency becomes a problem for applications like extended reality, connected vehicle technology, and 4K video streaming, which rely on latencies of less than 10 milliseconds (see Figure 1). Poor latency is a byproduct of the path the data travels, which traditionally involves multiple “hops” between data center and end user (see Figure 2). Each hop is an opportunity for traffic to slow down, so the more hops there are, the greater the overall latency.
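To make the effect of distance and hops concrete, here is a rough back-of-the-envelope sketch, not a model taken from this article. It assumes that signals in optical fiber cover roughly 200 km per millisecond and that each hop adds a fixed 0.5 ms of forwarding delay. The `round_trip_ms` function, the per-hop figure, and the two example paths are all illustrative assumptions.

```python
# Light in optical fiber travels at roughly two-thirds of its vacuum speed,
# which works out to about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km: float, hops: int, per_hop_ms: float = 0.5) -> float:
    """Estimate round-trip latency from path length and hop count.

    per_hop_ms is an assumed, fixed forwarding delay per hop; real
    switches and routers vary widely with load and hardware.
    """
    propagation = 2 * distance_km / FIBER_KM_PER_MS  # out and back
    forwarding = 2 * hops * per_hop_ms               # each hop crossed twice
    return propagation + forwarding


# Hypothetical paths: a distant central facility versus a nearby edge site.
central = round_trip_ms(distance_km=1500, hops=12)
edge = round_trip_ms(distance_km=30, hops=2)

print(f"central data center: {central:.1f} ms round trip")
print(f"edge data center:    {edge:.1f} ms round trip")
```

Under these assumptions, the distant path comes out at about 27 ms round trip, while the nearby path comes out at about 2.3 ms, which is within the sub-10 ms budget mentioned above.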

Extended Reality

Latency requirement for Augmented Reality (AR) is sub 10ms and sub 5-7ms for Virtual Reality (VR) gaming.

Connected Vehicles

Latency requirement for Connected Autonomous Vehicles (CAVs) is sub 2 ms, and they create 4 TB of data daily.

4K Video Streaming

Latency requirement for click-to-start 4K video is sub 10 ms, with ~3-6x the bandwidth needed for HD.

Figure 1

Figure 2

Throughput (Bandwidth)

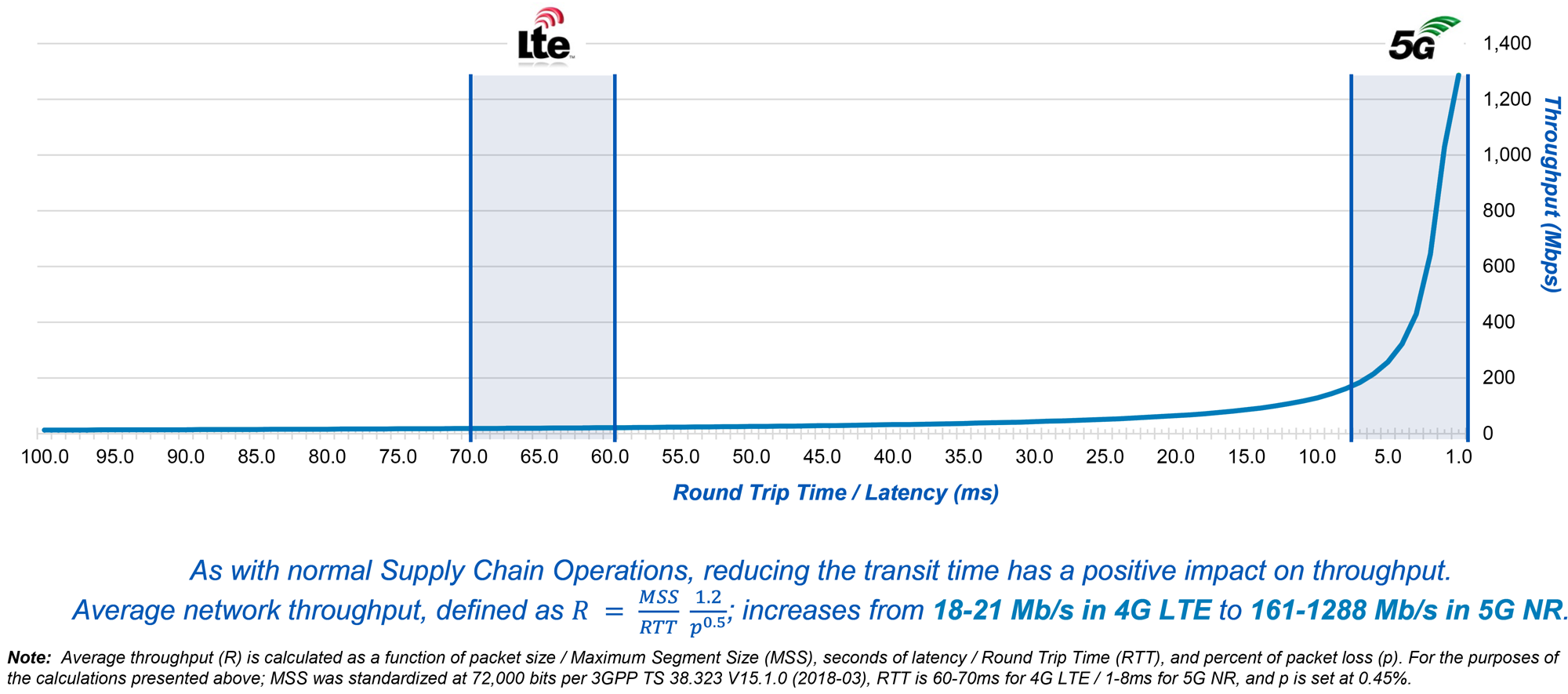

The distance between data center and end user also affects the data throughput, or bandwidth, of edge infrastructure. Edge applications such as mobile video and IoT require data volumes and throughput unseen before the advent of modern 4G/5G mobile technology (see Figure 3).

To understand the impact of throughput on edge computing, we can look to real-world supply chain networks. Think about Amazon and the number of local fulfilment centers they have across the country. Amazon keeps those centers stocked with popular items so that customers don’t have to wait for delivery from, say, a regional warehouse that’s farther away. Because the customer is getting their items from a local fulfilment center, they get them sooner. And even if one fulfilment center doesn’t have their items, there are other fulfilment centers nearby that do. So the customer gets their items quickly, and Amazon can fulfill more orders (i.e. throughput) than if the company only shipped from regional warehouses.

Edge data centers work similarly. When users request data, they’re getting it from an edge center that’s nearby, rather than from a traditional data center that’s farther away. And because edge centers exist in a mesh-like system (like Amazon’s web of local fulfilment centers), they’re able to push out a greater volume of data. The takeaway is that for end users to get the greatest throughput to their various devices, the data center they access must be less than 10 ms away (see Figure 4).
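One well-known reason that round-trip time limits throughput is that a single TCP connection can have at most one window of unacknowledged data in flight, so its throughput cannot exceed the window size divided by the round-trip time. The sketch below illustrates that bound only; it is not this article's own model, and the 64 KiB window and the two round-trip times are assumed values chosen for illustration.

```python
def max_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on single-connection throughput: window / round-trip time."""
    bits_per_window = window_bytes * 8
    rtt_seconds = rtt_ms / 1000
    return bits_per_window / rtt_seconds / 1_000_000


WINDOW = 64 * 1024  # assumed 64 KiB window (no window scaling)

far = max_throughput_mbps(WINDOW, rtt_ms=50)   # hypothetical distant data center
near = max_throughput_mbps(WINDOW, rtt_ms=5)   # hypothetical nearby edge site

print(f"50 ms away: at most {far:.1f} Mbps per connection")
print(f" 5 ms away: at most {near:.1f} Mbps per connection")
```

With the same window, cutting the round-trip time from 50 ms to 5 ms raises the ceiling tenfold, from about 10.5 Mbps to about 105 Mbps per connection. Larger windows and parallel connections raise the ceiling too, so this is only one of several factors in practice.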

Mobile Video

Mobile video internet traffic is expected to grow about ninefold, from 3.7 EB/mo. in 2016 to 33.2 EB/mo. in 2021.

Artificial Intelligence

The amount of analyzed data “touched” by cognitive systems will grow by a factor of 100 to 1.4 ZB in 2025.

Internet of Everything

Global M2M traffic is expected to grow more than sevenfold, from 2 EB/mo. in 2016 to 14 EB/mo. in 2021.

Figure 3

Figure 4

Characteristics of an Edge Data Center

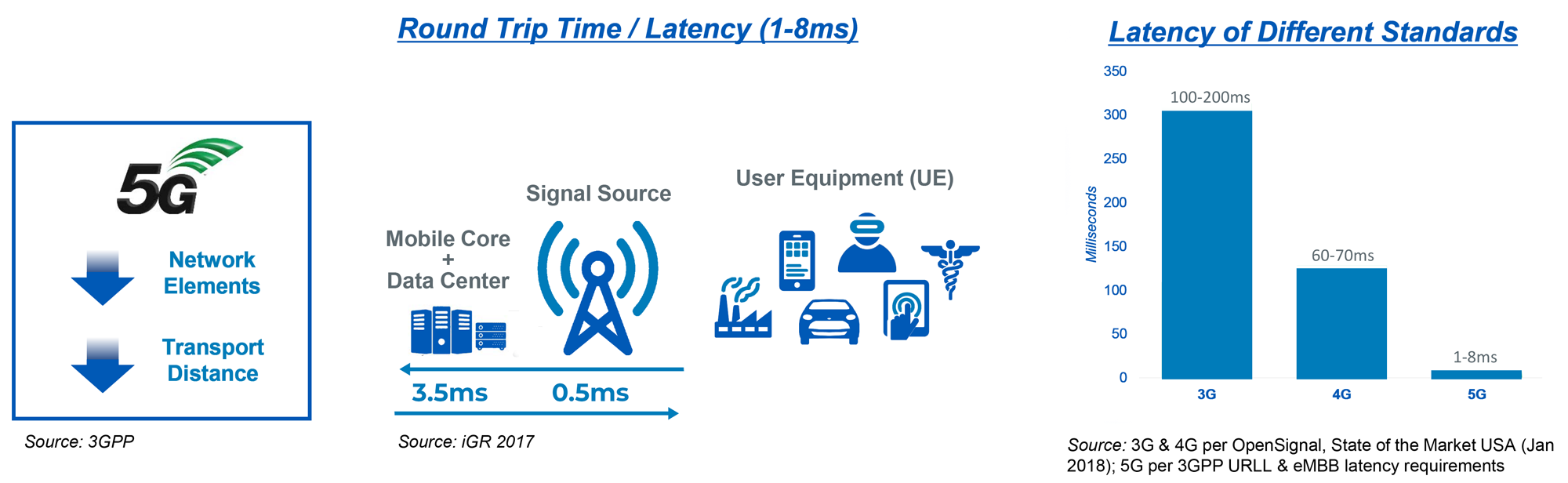

Clearly, for both latency and throughput, the best outcome for the end user is to locate compute capability close by. This eliminates data center hops and allows high-speed 5G or fiber-optic broadband to deliver the most data (see Figure 5). Edge computing is all about location.

Starting with proximity, the characteristics of an edge data center include:

- Located close to users: The primary goal is to reduce latency and increase throughput; physical proximity to end users ensures that roundtrip network time is minimal.

- One part of a bigger network: The edge data center is rarely the only part of the network. There may be larger central data centers to which data gets propagated in a “hub-and-spoke” type system. There may also be many edge data centers distributed across some larger geographic area.

- Fast processing power: In addition to minimizing network latency, the processing itself must also be fast to ensure the end users’ requests are completed quickly.

- Space-conscious: To achieve proximity, edge data centers are typically small so that they can fit wherever they need to be located. The exact size can range widely depending on the application and how close it needs to be to the user to get the required latency.

- Easy to maintain: Because edge data centers are often distributed over a wide geographic area, maintaining them is not like maintaining a traditional data center. Edge data centers should be designed with maintainability in mind and with consideration of the supply chain (i.e., easy-to-source components). Given the low-latency needs of most applications that rely on edge computing, the cost and downtime of significant maintenance would be prohibitive.

Figure 5

The Benefits of an Edge Data Center

By implementing a network using edge data centers, you afford your users numerous benefits:

1. Provides Faster Service and Increased Bandwidth Over Traditional Data Centers

By locating the data center closer to users, data can be served faster than it could be from a larger, remote data center. This is because the data has to travel a shorter distance and likely through fewer network devices such as switches or routers. In addition, edge data centers often have to process smaller portions of a network’s overall data, so the computation is often faster as well.

2. Enables Resilient Networks

Traditional data centers are typically either multi-tenant facilities, where many users or businesses house their servers in the same space to cut down on costs, or enterprise facilities that support just one organization. The drawback of these centralized nodes is that downtime or interrupted service often results in application outages costing millions. Edge data centers, on the other hand, create mesh coverage in which the downtime of one data center is covered by other edge locations. Edge applications and end users are the beneficiaries of this improved resiliency.

3. Cost-Effective

Because edge systems are tailored to exactly the needed workflow, they don’t necessarily require as much hardware or maintenance as a more traditional data center. This can eliminate a lot of unnecessary overhead and make it much more cost-effective to incrementally expand the network to meet demand.

4. Customizable

The modular nature of edge data centers not only affords cost effectiveness, but also allows for fine-grained customization based on the expected application. For example, an IoT device that processes images may require more GPUs than CPUs, which is typically not standard for a traditional data center.

5. Scalable

Keeping the edge data centers small and modular lets the network expand as needed based on demand. As long as the software is built using a distributed systems architecture (where components operating on different networks or platforms can still communicate with each other), scaling simply requires installing edge data centers into the network; the rest of the system can effectively stay the same. Here, the most important aspect of deployment is having the manufacturing capability to produce and add data center units as they are needed.
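The resilience and scalability benefits above can be illustrated together with a small sketch. It assumes a hypothetical router that knows each site's round-trip time and health, and simply sends a user to the fastest healthy site. The `EdgeSite` class, the `pick_site` function, the site names, and the latency figures are all made up for illustration and do not describe any particular product.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class EdgeSite:
    name: str
    rtt_ms: float         # assumed measured round-trip time from the user
    healthy: bool = True  # assumed to be updated by some health check


def pick_site(sites: List[EdgeSite]) -> Optional[EdgeSite]:
    """Return the healthy site with the lowest round-trip time, or None."""
    candidates = [site for site in sites if site.healthy]
    return min(candidates, key=lambda site: site.rtt_ms, default=None)


# A hypothetical mesh: two edge sites plus a distant central facility.
mesh = [
    EdgeSite("edge-a", 3.0),
    EdgeSite("edge-b", 6.0),
    EdgeSite("central", 40.0),
]

first_choice = pick_site(mesh).name   # nearest site serves the user

mesh[0].healthy = False               # edge-a goes down
fallback = pick_site(mesh).name       # a neighboring edge site takes over

mesh.append(EdgeSite("edge-c", 2.0))  # scaling: install another site
after_scaling = pick_site(mesh).name  # routing logic is unchanged

print(first_choice, fallback, after_scaling)
```

The selection logic never changes: losing a site or adding a new one only changes the list it operates on, which is the property the distributed architecture described above relies on.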

Implementing Scalable Edge Data Centers Using Mission Critical

Edge data centers are key to providing large volumes of data at fast speeds for distributed networks and modern cloud applications. However, implementing a cost-effective and maintainable edge center requires a provider that offers modular components that can be easily implemented and scaled over time.

IE Mission Critical provides custom and turnkey solutions to meet your infrastructure needs, from all-in-one IT modules, to modular cooling and electrical systems, to skid-mounted switchgear. Learn more about Mission Critical to see how you can build successful edge data centers for your network.